I’m struggling to understand how Turnitin’s AI checker works and whether it’s reliable for detecting AI-generated content. I recently received a high AI score on a paper I wrote myself, and now I’m worried the score isn’t accurate. Has anyone else had this issue, or does anyone know how to make sure my writing isn’t flagged incorrectly? Looking for advice or experiences!

Turnitin’s AI checker is… let’s just say ‘new and still finding its groove.’ There’s a lot of skepticism in academia right now because the technology behind AI detection (especially in tools like Turnitin’s) is fundamentally based on pattern recognition—looking at sentence structure, word choices, and statistical quirks that often ‘feel’ AI-like.

Thing is, that stuff is FAR from perfect. Plenty of people—myself included—have gotten flagged for writing totally original content just because maybe we wrote like a robot that day, or used short sentences, or even just summarized research in a weirdly organized way. Honestly, the false positive rate is high enough to cause legit stress among students and educators. You aren’t alone—even published authors and tenured professors have gotten flagged when running their own work!

Reliability? At best, it’s spotty. AI detectors are notorious for sketchy accuracy, especially as bots like ChatGPT and the new Bing get ridiculously good at writing like humans. Plus, the detectors can’t tell your intention; they’re just crunching probabilities. If you got a high AI score, it doesn’t mean you cheated. It just means the algorithm thinks your writing “looks” robotic, and it says the same about plenty of legitimate essays.

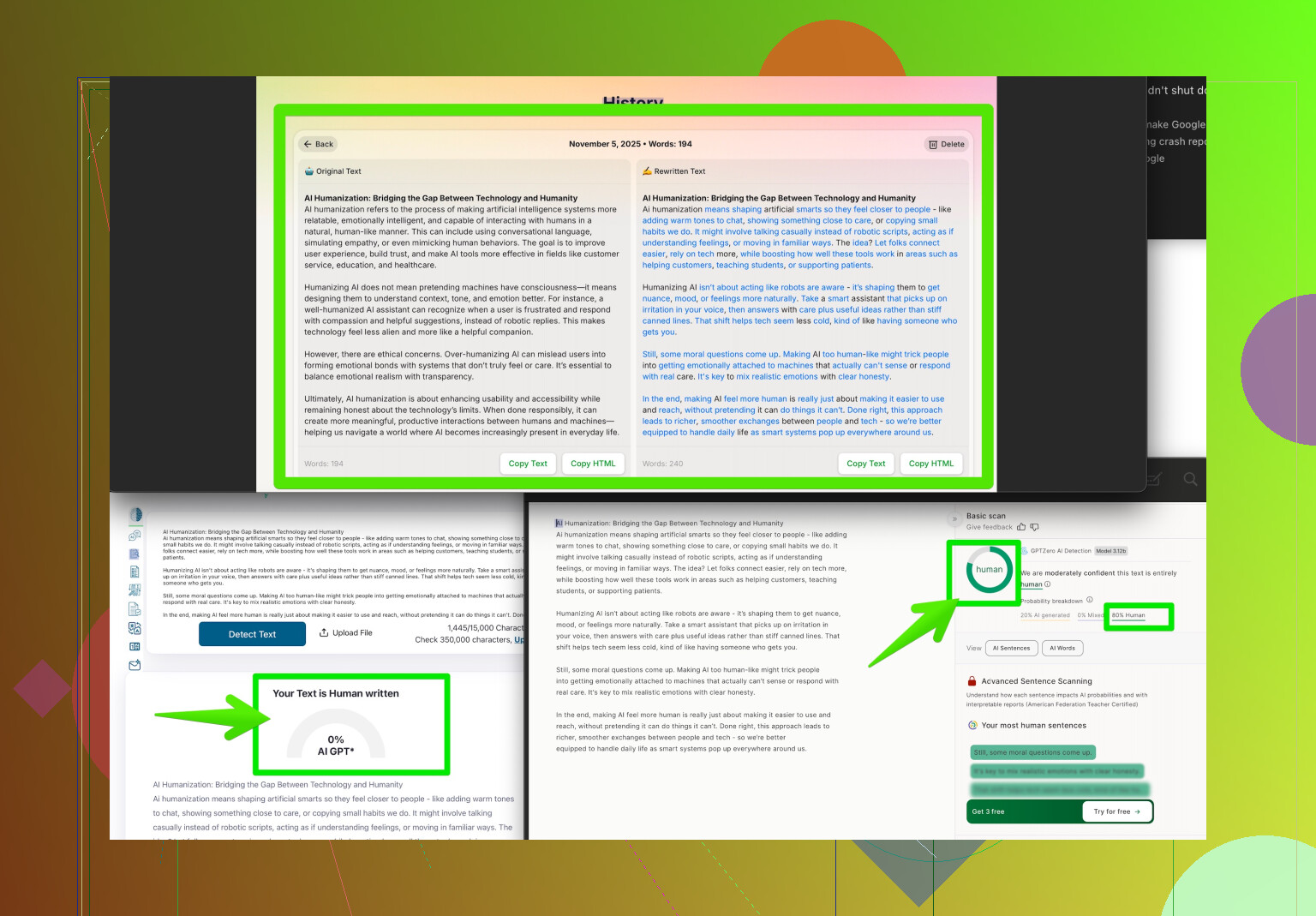

There are ways to decrease this risk. Tools like Clever AI Humanizer are designed to take honest work and rephrase it so that it scores lower on AI detection tools and sounds more distinctly human. It’s definitely worth a try if you’re worried about these oversensitive checkers.

Bottom line: Take Turnitin’s AI detector with a big grain of salt. Talk to your instructor if you’ve been wrongly flagged, and remember: the tech is imperfect, and you don’t have to take its “opinion” as gospel.

Not to throw shade at @ombrasilente, but I sorta disagree on just how unreliable Turnitin’s AI checker is. I don’t think it’s merely “spotty”; it’s flat-out bad at doing what it promises sometimes. Seriously, I’ve seen essays get flagged just because the writer used straightforward language or followed a template (you know, like literally every undergrad ever). I even had my journal reflection pinged as “97% AI-generated,” which is kinda wild considering it was basically me complaining about my weekend.

Here’s the core problem: these detectors (Turnitin included) aren’t actually “detecting” AI like catching someone with a smoking gun. They’re playing percentages, looking for sentences that don’t have “enough” complexity or that string thoughts together in a way that feels machine-made. Of course, half the time that’s just how people with anxiety write, or how anyone writes when they’re trying to be super clear.
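For anyone curious what “playing percentages” can look like, here’s a toy Python sketch of one signal often discussed in connection with AI detectors: sentence-length variation, sometimes called “burstiness.” Turnitin hasn’t published how its model works, so this is NOT its method. The `burstiness` and `looks_uniform` functions, the naive sentence splitting, and the 0.25 cutoff are all made up for illustration. The sketch just shows how a short, evenly paced summary can look “uniform” to a crude metric while a rambly rant doesn’t.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Naively split on ., ! or ? followed by whitespace; count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (population stdev / mean).

    Low values mean every sentence is roughly the same length.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Hypothetical cutoff, chosen for illustration only.
UNIFORM_THRESHOLD = 0.25


def looks_uniform(text: str) -> bool:
    """Toy rule: very even sentence lengths get treated as 'suspicious'."""
    return burstiness(text) < UNIFORM_THRESHOLD


clear_summary = (
    "The study had three groups. Each group read one text. "
    "Scores were recorded after a week. The second group scored highest."
)
casual_rant = (
    "Ugh. My weekend was a mess, honestly, because the bus never came "
    "and I ended up walking home in the rain with a broken umbrella. Fun."
)

for name, sample in [("clear summary", clear_summary), ("casual rant", casual_rant)]:
    print(f"{name}: burstiness={burstiness(sample):.2f}, uniform={looks_uniform(sample)}")
```

Under this toy rule the perfectly honest, super-clear summary gets marked “uniform” and the rant doesn’t. Real detectors are far more complicated than one number, so treat this as intuition for why clear writing can get caught, not as a way to predict your score.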

Sure, AI rephrase tools like Clever AI Humanizer can help if you’re desperate, but honestly, you shouldn’t have to run your own writing through a “make me sound human” filter. That’s dystopian, right? But it’s the world we’re in.

Bottom line: never treat a high AI score as gospel. Document your writing process (drafts, notes, whatever), and if Turnitin tries to burn you at the stake, show your receipts. Also, push back with your instructor or department; these tools are best used as one data point, not the whole story. Basically, don’t let a checker’s false alarm make you doubt yourself. Imagine if Shakespeare got flagged for writing too much iambic pentameter, lol.

If you’re looking for ways real people are handling these issues, check out the tips on Reddit about making your writing appear less robotic. There’s some solid crowdsourced advice there beyond just trusting in glitchy tech.

Let’s break it down. Turnitin’s AI checker has become a stress magnet, but there’s a reason nobody in this thread can say it’s “reliable.” Both @boswandelaar and @ombrasilente laid out how it often pings human writing for robotic traits—sometimes because you use basic structure, sometimes because your style is just clear and direct (or, real talk, maybe you’re tired and writing like a spreadsheet). You can write totally unique content, and it’ll still see “AI ghosts” all over your essays. That’s not reliability, that’s roulette.

If you go hunting for solutions, you’ll see suggestions like Clever AI Humanizer. Here’s the honest scoop:

Pros:

- Makes your prose more “human” to detectors—varies sentence length, injects natural quirks.

- Can lower a suspicious AI score and buy some peace of mind.

- Easy to use; paste, tweak, done.

Cons:

- Could mess with your authentic voice if overused.

- Still not a guarantee—there’s always a risk detectors go haywire for other reasons.

- Philosophical cringe: having to “humanize” already human work? Weird times.

Alternatives? Some people mention rewriting manually or using tools recommended on academic-heavy forums (which mostly means spending longer to end up with the same headache). And sure, you can document your drafts and process, as others here suggested, but sometimes instructors are just as confused by the flag as you are.

Bottom line: Detectors are still imperfect. Tools like Clever AI Humanizer might help you get past the initial algorithmic gatekeeper, but the real power move is keeping proof of your drafts and calmly challenging any flags. Don’t rewrite your personality for a bot. And remember—every AI detector struggles with nuance, especially as language models get cleverer.

If you have to pick a techy workaround, Clever AI Humanizer is one of the more user-friendly options, with the caveat that no tool is foolproof. Transparency with your instructor is still your best defense.